Incrementally Better #4

On the 95% relationship rule and the broken trust triangle of agentic AI

Sigh. It took me way longer to publish this edition than planned - for excuses not worthy of this audience. I will probably start publishing shorter but more frequent updates in the future rather than trying to cram multiple posts and recommendations into one long edition. If you have any strong opinions on this, let me know at stephangeering@pm.me!

The 95% rule

Gather around, friends. A 50-year-old man is sharing some of his wisdom [1]. It has helped me with my relationships. Hopefully it can do the same for you.

Who doesn’t know this feeling: Your partner has filled the dishwasher the wrong way, it falls on you again to arrange a get-together, the toothpaste has been squeezed wrongly … again. You are angry. Why? These small annoyances are not really such a big deal on their own. What’s irritating is that they confirm a pattern, a habit that you have observed and that you now focus on obsessively. It’s not an outlier, it happens constantly! That’s why these events can be the last straw. Simmering annoyance explodes into a big argument.

I have certainly been there. What we tend to forget in that moment is what we love about that person. Their smile, their consoling words when you need a shoulder to cry on, the fun and banter. All taken for granted and ignored, because our anger focuses all our attention on that small issue. 95% of wonderful qualities pushed aside by all-encompassing anger about the trivial 5%.

It’s very easy to analyse this calmly after an argument. But it’s very hard to remember it right there in the moment. I have therefore tried to internalise this as a rule. Whenever I see a bad habit, I try to remember the 95%. This has helped me put things in perspective. Plates left dirty in the sink again? - I don’t get angry because I think about the 95% of wonderful qualities that more than make up for it.

The 95% rule of course doesn’t mean accepting that these annoying habits will never change [2]. They can change. But if they don’t change right away, the 95% rule puts them and your relationship into perspective. Your partner droning on about their relationship theory? 95%, baby, 95%!

Uplifting content

Bit of a palate cleanser before we jump into the main bit:

Little delight: The beauty of portable music, NYC and roller-skating in one clip:

Sound bite: Quite possibly the most beautiful piece of electronic music - Soulwax - Close to Paradise (Instrumental)

Eye candy: China Camp State Park, San Rafael, California, US by Nathan Wirth:

Agentic AI: The broken trust triangle

Ever since agentic AI emerged as the next hot thing, I have been sceptical. So much could go wrong when deploying it on the untamed internet. Having read more about agentic AI this year, I have turned my instinctive scepticism into a somewhat more solid theory of the trust issue, which I have decided to call “the broken trust triangle”. What is the broken trust triangle? To explain it, we need to start with the basics: The definition of agentic AI.

But what is agentic AI exactly?

Definitions, shmefinitions? No! - Definitions do matter. If we can’t define it precisely, we clearly don’t understand how agentic AI differs from (generative) AI [3]. And understanding the difference between agentic AI and generative AI is of course a prerequisite for assessing whether this difference creates incremental risks that call for additional safeguards.

So which definition to use? I quite like the IEEE’s definition [4]:

“Agentic AI refers to artificial intelligence systems that can act independently to achieve goals without requiring constant human control. These systems can make decisions, perform actions, and adapt to situations based on their programming and input data, without human input.”

While not explicitly reflected in the IEEE definition, an additional important element is the ability to interact with third-party websites and tools through protocols like Anthropic’s Model Context Protocol (MCP). Think of your AI assistant booking your holidays on the web or creating a priority list of your unread books in Excel.

Combining the IEEE definition with the tool-use capability mentioned above, we have the following characteristics that distinguish agentic AI from other types of AI:

(1) Increased level of autonomy

(2) Decision-making without human input

(3) Interaction with third-party websites and tools

Incremental risks of agentic AI

These characteristics have two effects from a risk management perspective. First of all, they may increase general AI risks such as accuracy and bias (multi-step actions may compound inaccurate or biased output) [5]. Secondly, these characteristics may result in completely new risks. For this post, I want to focus on the integrity & trust issue [6] as this has not been widely covered in AI governance discussions.

To understand the integrity concern, I highly recommend Simon Willison’s article on the ‘lethal trifecta’. The integrity aspect is one leg of Willison’s ‘lethal trifecta’: Generative AI cannot distinguish between trustworthy and deceptive instructions. Every instruction is by default considered trustworthy. This is not a big issue if generative AI is deployed in a contained, trustworthy environment (for instance when deploying M365 Copilot in your company’s environment).

But agentic AI is not always deployed in a contained, trustworthy environment. It is intended to interact with a wide variety of third-party tools and websites that may include untrusted or even malicious content. As Willison points out, an AI agent accessing websites with malicious content can lead to the manipulation of AI agents and exfiltration of data. Bruce Schneier (in ‘Agentic AI’s OODA Loop Problem’) expands on this concept and explains how the integrity issue impacts every aspect of the agentic AI decision-making process (using the ‘OODA Loop’ model):

“AI OODA loops and integrity aren’t fundamentally opposed, but today’s AI agents observe the Internet, orient via statistics, decide probabilistically, and act without verification. We built a system that trusts everything, and now we hope for a semantic firewall to keep it safe. The adversary isn’t inside the loop by accident; it’s there by architecture. Web-scale AI means web-scale integrity failure. Every capability corrupts.”

Ouch, that doesn’t sound great.

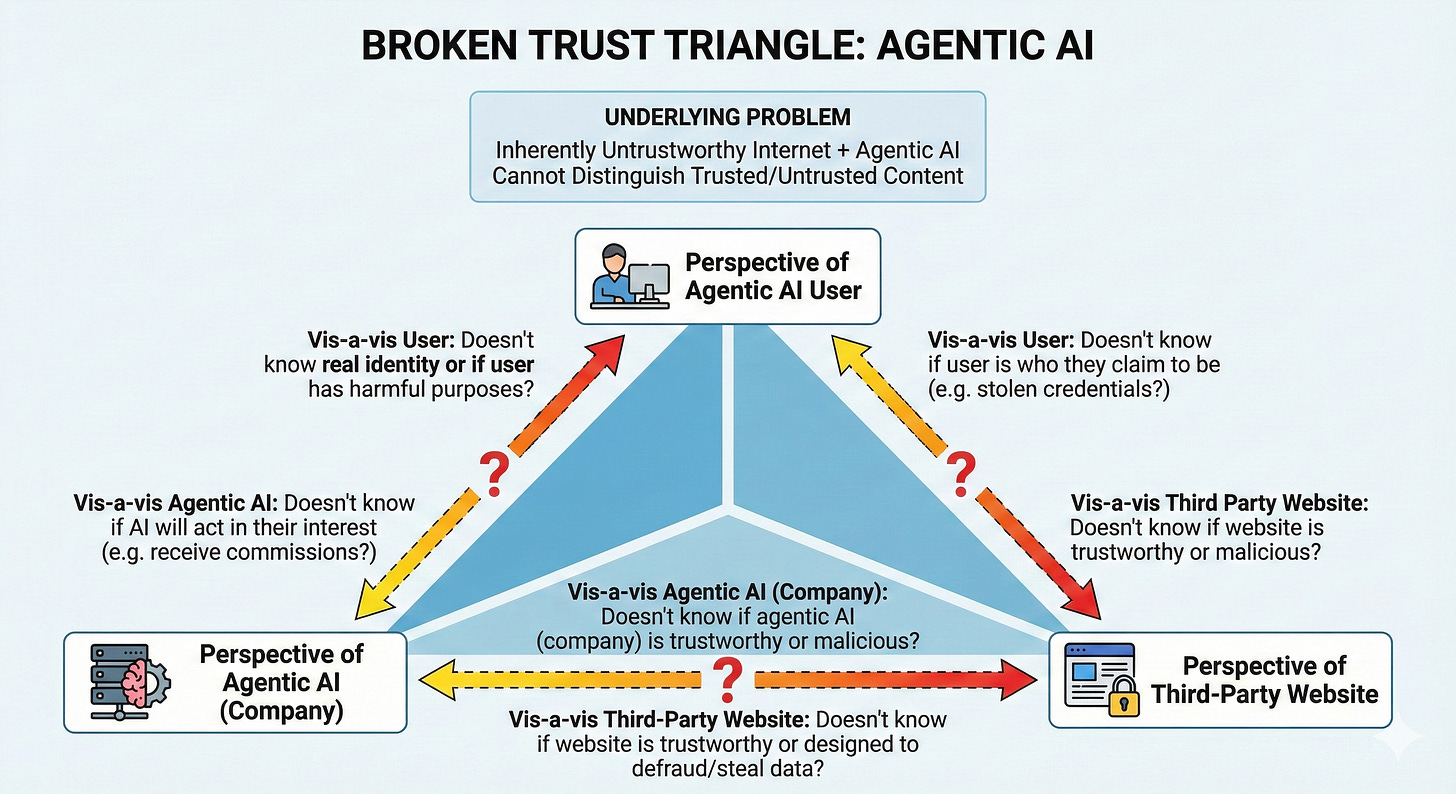

The broken trust triangle

If you now combine this integrity risk with the increased autonomy and decision-making capabilities, you create a qualitatively different risk compared to human interaction: The “broken trust triangle”. It’s a triangle of relationships where no one can fully trust the other parties: The third-party website operator won’t know if it’s really me trying to purchase the heated cushion for desk chairs [7] or if it’s someone who claims to be me. The agentic AI company can’t tell if the website claiming to sell heated cushions for desk chairs is legitimate or just trying to steal my data. And so the broken trust triangle goes on (see picture below).

This is not helped by the fact that the commercial interests of agentic AI companies and third-party websites will often not be aligned (no surprise, then, that Amazon blocked the ChatGPT shopping agent).

Another big question - to be tackled another time in more detail - resulting from the broken trust triangle: How do we ensure agentic AI acts in the best interest of the user? Whether it’s purchasing decisions, life choices or voting - the risk resulting from the ability to influence millions of agentic AI users, even just slightly on the margins, is significant. One possible idea is a fiduciary duty for agentic AI companies to act in the interest of users. But such a duty is - so far! - not included in the EU AI Act or other AI laws.

As a result of the broken trust triangle, I suspect it will be a bit of a wait until we can ask our AI assistant to book our holidays. Similar to testing autonomous taxis in cities with favourable conditions, agentic AI will likely need piloting in controlled and trustworthy environments (e.g., company-internal or within an environment where all parties are authenticated and vetted) before it can be released in the wild west of the internet.

My posts elsewhere

My recent posts on LinkedIn and Bluesky:

Podcast on expert vs public perception of AI (LinkedIn)

Thread covering IAPP DPC25 - be prepared for random and irreverent posting (Bluesky)

‘AI as Normal Technology’ vs ‘AI 2027’ (LinkedIn)

‘Privacy People’ screening - swan song on the golden era of privacy pros? (LinkedIn)

When GDPR simplification was just a glint in the EU Commission’s eye (Bluesky)

CNIL event on economic impact of GDPR (LinkedIn)

Beauty is everywhere (Bluesky)

Recommended reading and listening

Reading: Privacy books for your Christmas list

Daniel Solove has updated his overview of privacy books past and present including a short summary of each book. Just in time for your Christmas list and/or present purchases!

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=5277793

Listening: Introduction to AI governance

For a great introduction to building an AI governance program, look no further than this series of three short episodes (each around 16 mins) of Bristows’ The Roadmap podcast (generally worth subscribing to).

Vik Khurana and Simon McDougall explain the basics of AI governance in plain language, emphasising the importance of building on and integrating with existing risk management processes and controls.

First episode: Identify & Assess

Second episode: Design & Implement

Third episode: Manage & Monitor

Reading: Insuring AI risks

“Insurers increasingly view AI models’ outputs as too unpredictable and opaque to insure ...”

Of course insurers would claim this, cynics may say. They want to limit their exposure. But I am not sure this is a good sign. Interesting FT article on how insurers are (un)willing to underwrite AI risks.

https://www.ft.com/content/abfe9741-f438-4ed6-a673-075ec177dc62 (£)

Reading: Too much attention? How context rot could impact LLM progress

This is a fascinating read on why the quest for larger context windows (which help models be more consistent and personalised) runs into an architectural problem that leads to ‘context rot’:

“... an LLM effectively “thinks about” every token in its context window before generating a new token. That works fine when there are only a few thousand tokens in the context window. But it gets more and more unwieldy as the number of tokens grows into the hundreds of thousands, millions, and beyond.”
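To put rough numbers on the quote above, here is a minimal Python sketch. It assumes plain causal self-attention, where each token is compared against itself and every token before it, and it ignores any optimisations real models may use. The function name `attention_comparisons` is made up for illustration.

```python
def attention_comparisons(context_tokens: int) -> int:
    """Token-to-token comparisons needed to process a context of this length,
    assuming each token attends to itself and to every token before it."""
    return context_tokens * (context_tokens + 1) // 2


# Compare a few context sizes (illustrative values only).
for n in (1_000, 100_000, 1_000_000):
    print(f"{n:>9,} tokens -> {attention_comparisons(n):,} comparisons")
```

Under this simplification, a context that is 1,000 times longer needs roughly a million times more comparisons, which is why simply enlarging the window gets unwieldy.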

Reading: Nested Learning

One of the key limitations of LLMs: They can’t update their knowledge or acquire new skills from new interactions once deployed. This is like your junior research assistant not learning anything on the job. Google has published a new approach called ‘Nested Learning’ that could resolve this (and possibly also address the ‘context rot’ issue mentioned above). It allows models to learn from data using different levels of abstraction and time-scales, much like the brain. This is not just a theoretical model: Google built an architecture called ‘Hope’ that appears to have performed well at tasks that are challenging for LLMs.

https://venturebeat.com/ai/googles-nested-learning-paradigm-could-solve-ais-memory-and-continual

Listening: The geopolitics of AGI

Insightful 80,000 Hours podcast episode with Helen Toner. Yes, the former OpenAI board member. If you are interested in the longer-term development of AI and the related risks, Toner is one of the most balanced and interesting voices in my view. The episode (2 hrs 20, but worth it) covers the geopolitics of AGI (winning the AI race, China, UAE), the risks of AI concentration and her preference for ‘steerability’ over alignment.

Reading: ‘AI as Normal Technology’ vs ‘AI 2027’

What’s better than you exercising your dialectical synthesis skills through comparing ‘AI as Normal Technology’ and ‘AI 2027’? - The authors of those articles coming together and doing this work themselves in the article linked below. In the article, they succinctly lay out their common assessment and positions.

My very high-level summary: https://www.linkedin.com/feed/update/urn:li:share:7395849506791051264/.

Reading: Conscious AI?

Intriguing (but not short) AI Frontiers article by Cameron Berg on the question of consciousness in current frontier models. It starts with the definition of consciousness and deals with the standard counterarguments before presenting the case. Based on existing evidence, it concludes that there is a “nontrivial probability of AI consciousness.” I am sceptical, but this is a well-argued piece.

It also points out - correctly in my view - that overlooking consciousness has far greater consequences than mistakenly attributing it where it’s absent.

https://ai-frontiers.org/articles/the-evidence-for-ai-consciousness-today

P.S. MIT (https://airisk.mit.edu/) have captured welfare of conscious AI as a risk (see 7.5) in their comprehensive Domain Taxonomy of AI Risks overview.

[1] It is more of a distillation of wisdom I soaked up from my favourite intellectuals such as Heather Cox Richardson, Brené Brown, Ezra Klein, Scott Galloway and Daniel Schmachtenberger (all worth your time and attention) mixed with the lessons learned from my relationships rather than a fully original thought - but isn’t that how creativity works anyway?

[2] I hope it’s clear from this piece, but just to be very explicit: This rule obviously doesn’t apply to toxic behaviour. Toxic behaviour is not the same as the minor annoying habits I talk about and that can be excused with the 95% rule. Toxic behaviour is unacceptable.

[3] The lack of definitional clarity between agentic AI and generative AI will have been painfully apparent to folks attending agentic AI sessions and webinars recently. On offer is often just a repetition of the well-established generative AI risks and controls. That’s of course mildly helpful. Hence my insistence on the definition.

[4] By the way, the IEEE has been one of the pioneering organisations working on responsible AI since 2016!

[5] For a great article on the incremental risks of agentic AI and the additionally required (compared to other AI) safeguards look no further than this article by Jey Kumarasamy: https://iapp.org/news/a/understanding-ai-agents-new-risks-and-practical-safeguards/.

[6] Technically, I guess you would have to separate these: Integrity is the risk at hand. The trust issue is technically the consequence of the risk.

[7] What, heated desk chair cushions? Yes! A great way to keep you warm in your home office without having to remortgage your house to afford the heating costs. I can recommend Stoov’s Big Hug (other products are of course available).